BLOG: June 2010 - December 2013
III - ALTERNATIVE TESTS FOR BREAST CANCER SCREENING
6. Physical breast exam

The 2009 United States Preventive Services Task Force (USPSTF) recommendations, stating that there is insufficient evidence for the benefit of clinical breast examination (CBE) and that clinicians shouldn't teach women to perform breast self-examination (BSE), came as a surprise to many. For decades, physical breast exam (CBE and BSE) was promoted as a major weapon in the proclaimed war on breast cancer (BC). Used as a complement to screening X-ray mammography for earlier detection, it presumably contributed to the assumed benefits of less invasive treatment and reduced BC mortality. No one doubted their value; it was only assumed that they were not as good as mammography. Then, coincidentally just as new research started implying a questionable benefit-to-risk ratio for standard X-ray mammography screening, a new notion surfaced, picturing physical breast exam (PBE) as not a viable screening option and claiming there is insufficient evidence to support its efficacy in that role. It is becoming the dominant view among what most people see as competent organizations and institutions, but it hasn't entirely taken over yet. So, as of 2009, annual CBE for ages 40+ is recommended by the American Cancer Society (ACS), but not by the National Cancer Institute (NCI), the American Medical Association (AMA), the American Academy of Family Physicians (AAFP), the American College of Physicians (ACP), or the World Health Organization (WHO). There is somewhat more agreement on the use of CBE for periodic evaluation on a more individualized basis, which all of these organizations generally support except one (WHO). The USPSTF maintains that the evidence is insufficient to justify a recommendation either for or against. And the WHO recommends not using it. As for BSE, it is recommended only by the AMA, recommended against by the WHO, and undecided upon ("insufficient evidence") by the rest of these organizations.
What is the evidence actually telling us about the efficiency of the physical breast exam? The answer may vary, depending on whom you ask. An interesting, and certainly familiar, pattern is that the evidence tends to be selectively used and/or interpreted based on the reviewer's preference, belief, or just the currently prevailing view. From the beginning of the mammography era, both CBE and BSE were nearly unanimously promoted as effective in early detection, and supporting evidence was there. Early studies from the 1970s found not only that BSE can be effective as a screening tool (Foster et al. 1978), but also that it could reduce BC mortality by 19-24% (Greenwald et al. 1978). It is documented that there was a significant reduction in the size of presenting cancers before the introduction of mass mammography screening (Cady et al. 1993), which could only be credited to physical exam. A 1988 meta-study published in the British Medical Journal (Self examination of the breast: is it beneficial?, Hill et al.), covering 12 studies with 8118 breast cancer patients, found that women practicing BSE had a 36% lower risk of having cancer spread to the lymph nodes at the time of diagnosis, and a 44% lower risk of having a tumor larger than 2 cm. Also, critics of standard X-ray mammography tend to point to the evidence supporting BSE effectiveness, such as a Japanese study finding that it reduces breast cancer mortality risk by 38% (Effectiveness of mass screening for breast cancer in Japan, Kuroishi et al. 2000), or the well-known Canadian randomized controlled trials, which found that CBE complemented with BSE was as efficient as X-ray mammography with respect to breast cancer mortality.

On the other hand, those claiming there is no evidence for the effectiveness of physical breast exam tend to ignore evidence to the contrary, or problems with the supporting evidence they use. For instance, the 2009 USPSTF recommendations cite "insufficient evidence" to assess "additional benefits or harms" of CBE "beyond" X-ray mammography, while recommending against BSE (Screening for breast cancer: U.S. Preventive Services Task Force recommendation statement). This wording, however, conceals that the only solid evidence among the several sources it cited for CBE, the two Canadian studies, clearly suggests that CBE screening combined with BSE is as effective as X-ray mammography in reducing breast cancer mortality. The research cited in this USPSTF report in support of its conclusion that there is no evidence of benefit from practicing BSE is:
- two recent randomized controlled trials, in China (Randomized trial of breast self-examination in Shanghai, Thomas et al. 2002) and Russia (Results of a prospective randomized investigation [Russia (St.Petersburg)/WHO] to evaluate the significance of self-examination for the early detection of breast cancer, Semiglazov et al. 2003),
- one observational study (Breast self-examination: self-reported frequency, quality, and associated outcomes, Tu et al. 2006),
- one meta-analysis (Breast self-examination and death from breast cancer: a meta-analysis, Hackshaw and Paul, 2003), and
- two reports: one by the Nordic Cochrane Center (Regular self-examination or clinical examination for early detection of breast cancer, Kösters and Gøtzsche, 2008), and the other by the Canadian Task Force on Preventive Health Care (2001 update: should women be routinely taught breast self-examination to screen for breast cancer?, Baxter et al.).
The centerpieces are the two randomized trials in China and Russia.
The report makes no mention of their overall quality or of possible inconsistencies in their results; it merely presents the results, which do not find evidence of BSE benefit. However, the USPSTF's preliminary report (Screening for breast cancer: Systematic Evidence Review Update for the U. S. Preventive Services Task Force, Nelson et al. 2009) reveals some relevant "details" omitted from the final report, and they raise serious doubts about the reliability of these two studies. It says that the Russian study, ranked in that report as of "fair quality", had all kinds of problems:
∙ the Moscow branch went so poorly that it was abandoned
∙ in the St. Petersburg branch, compliance in the BSE group fell to only 18% within four years and rose only to 58% even after refresher courses; actual compliance was probably lower still, because it was self-reported
∙ in St. Petersburg, all-cause mortality was 7% higher in the BSE group (95% CI 0.88-1.29), strongly indicating a baseline imbalance between the two groups
∙ the number of women in the study varies from one report to another by as much as 3,438, with no explanation offered
∙ the numbers of women with benign biopsies and of those diagnosed with breast cancer do not add up to the reported number of diagnostic biopsies
∙ blinding to group status, including that of the cause-of-death assessors, wasn't described, and probably wasn't done

In addition, we also know that women with previous breast cancer or other malignancies were excluded, but no numbers were given for each group; an unknown number of women from each group was lost due to migration; an unknown number of women from the control group had clinical breast examination; an unknown number of women were not in the 40-64 age group when they entered the study; and a year into the study, an internal test showed that only 10% of women performed palpation by concentric movements correctly, 15% palpation with three fingers, and 58% palpation using finger pads (Regular self-examination or clinical examination for early detection of breast cancer, Kösters and Gøtzsche, 2003). On top of that, like any observational study in which the degree and quality of compliance in the intervention (here BSE) group is determined from self-reported data, it is inherently less reliable than controlled studies, and considered lower-quality evidence. It is also important to note that the study was designed to determine the effectiveness of BSE added to a yearly clinical breast exam (CBE) as "usual care", i.e. the intervention group had CBE+BSE and the control group CBE alone. Most comments fail to mention this, creating the impression that the study result implies BSE has no effect at all. When do we say "Enough is enough: the study just cannot be considered reliable!"? How poor, overall, does a study need to be for that?

The Chinese study was rated "good" by the USPSTF, but how good was it? It is generally agreed that it was better designed, and had a better compliance rate, than the Russian study. The intervention group practiced BSE, while the control group, at least by design, didn't have any breast screening.
Yet, the rate of detected breast cancers was nearly identical for the BSE and control group (3% lower in the former), while it was - as expected - significantly (24%) higher in the BSE group in the Russian trial. This cannot be excluded as indication of a possible, or rather probable

poor effective compliance in the Chinese

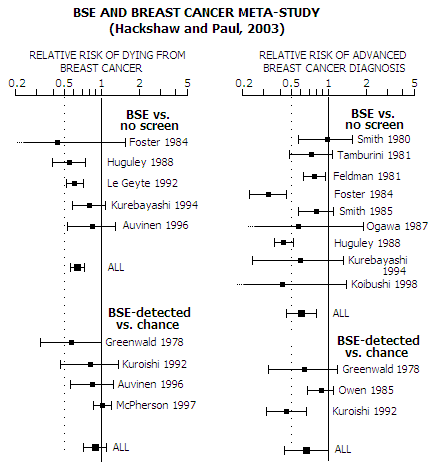

study and/or The rate of small tumors (below 2cm) detected was practically identical in BSE and control group - another indication of low effective compliance - including improperly practiced breast exam - in the BSE group. As with the Russian study, the exact randomization mechanism is not described, thus it cannot be accessed how comparable were the two groups. Over 23,000 women (about 8% of the total) were excluded after randomization, nearly half because they couldn't be located, and most of the rest due to migration. In all, over 3600 more were excluded from the intervention group. Well over twice more (3656 vs. 1331) were excluded from the intervention group due to refusal to answer questionnaire, which indicates randomization problem in securing balanced effective compliance. Contamination (BSE practicing) in the control group was estimated at 5%, mainly due to movement of women from factories assigned to the intervention group to factories assigned to control group. If accurate, it is negligible, but the question is how accurate it is. Again, the main problem is that self-reported data leaves plenty of room for significant uncertainties. The attendance rate for the individual practice sessions in the intervention (BSE) group fell sharply from over 90% in 1989-91 to below 50% in 1995, the fall being attributed mainly to the "changes associated with economic reform". All-cause mortality was as much as 10% lower in the intervention group, opening both, question of randomization quality and of possible cause-of-death bias (e.g. other-causes deaths classified as breast cancer deaths in the intervention group, and/or breast cancer deaths classified as other-causes deaths in the control group). Again, as an observational study, by itself second-rate evidence even without indication of bias or inadequacy, the Chinese trial cannot be seen as the decisive evidence with the above uncertainties and indications of inadequate quality. 
Yet it was the best piece of evidence in the USPSTF report concluding that there is "no evidence" of benefit from practicing BSE. Is the USPSTF's conclusion a bit biased? The disconnect between the actual evidence and the USPSTF's finding is illustrated even better by another piece of evidence it refers to, a study by Hackshaw and Paul (Breast self-examination and death from breast cancer: a meta-analysis, 2003).

The USPSTF report states: "Published meta-analyses of BSE randomized trials (59-61) and non-randomized studies (59-61) also indicate no significant differences in breast cancer mortality between BSE and control groups." It cites as references Hackshaw et al. (59), Baxter et al. (60, the Canadian Task Force report, discussed below) and Kösters and Gøtzsche (61, based on the Shanghai and St. Petersburg trials). We've already seen that the Russian and Chinese trials cannot be considered good evidence. It is also obvious that the studies analyzed in Hackshaw et al. show a clear pattern of benefit from practicing BSE with respect to both reduced breast cancer (BC) mortality (36% reduction for the BSE vs. no-screen group) and reduced risk of advanced breast cancer at diagnosis (40% reduction for the BSE vs. no-screen group, and 34% reduction for BSE-detected vs. chance-detected breast cancer). The BC mortality reduction for BSE-detected vs. chance-detected breast cancer is a statistically insignificant 10%, mostly due to statistical leveraging based on the number of participants. This formal criterion, which weights each study by the inverse of the variance of its estimate and thus gives much more weight to larger studies, favors McPherson et al., a likely flawed study indicating 6% greater mortality for the BSE population, a very unlikely direction, in contrast with literally all other studies. In all, what Hackshaw et al. really indicates is that the benefit of practicing BSE is at least as significant as that of standard mammography screening, and probably greater.

Similarly, the Canadian Task Force on Preventive Health Care finds no evidence of benefit from BSE, but finds enough evidence of harm to recommend against it (Should women be routinely taught breast self-examination to screen for breast cancer?, Baxter et al. 2001).
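To make the weighting point concrete, here is a minimal sketch of fixed-effect, inverse-variance pooling of relative risks. The four studies and their counts are entirely hypothetical (they are not the data analyzed by Hackshaw et al.), and the variance formula is the usual large-sample approximation for the log of a relative risk. The sketch only shows how one large study with an unfavorable result can pull the pooled estimate toward "no effect" even when several smaller studies agree on a benefit.

```python
import math

# Hypothetical study data: (deaths_bse, women_bse, deaths_control, women_control).
# Illustrative numbers only, not taken from any real trial.
STUDIES = [
    (14, 1_000, 20, 1_000),      # small study, RR = 0.70
    (14, 1_000, 20, 1_000),      # small study, RR = 0.70
    (14, 1_000, 20, 1_000),      # small study, RR = 0.70
    (106, 20_000, 100, 20_000),  # large study, RR = 1.06
]


def pooled_relative_risk(studies):
    """Fixed-effect inverse-variance pooling of relative risks.

    Returns the pooled relative risk and each study's share of the total weight.
    """
    log_rrs, weights = [], []
    for a, n1, c, n2 in studies:
        log_rr = math.log((a / n1) / (c / n2))
        # Large-sample approximation of the variance of log(RR).
        variance = 1 / a - 1 / n1 + 1 / c - 1 / n2
        log_rrs.append(log_rr)
        weights.append(1 / variance)  # inverse-variance weight
    total = sum(weights)
    pooled_log_rr = sum(w * x for w, x in zip(weights, log_rrs)) / total
    return math.exp(pooled_log_rr), [w / total for w in weights]


if __name__ == "__main__":
    rr, shares = pooled_relative_risk(STUDIES)
    print(f"pooled RR: {rr:.2f}")
    for i, share in enumerate(shares, start=1):
        print(f"study {i}: {share:.0%} of total weight")
```

With these made-up numbers the large study carries about two thirds of the total weight, and the pooled relative risk comes out at roughly 0.93, even though three of the four studies show a 30% reduction. The weight grows roughly in proportion to the number of events (deaths) in a study, which is why a single large study can dominate the result.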
As with the USPSTF, the bulk of its evidence comes from two recent large randomized controlled trials in Russia and China, as well as a "quasi-randomized" (nonrandomized) controlled UK trial (UK Trial of Early Detection of Breast Cancer Group, 1988), none of which showed a benefit from BSE. In addition, it selects five case-control and cohort studies that satisfy its criteria. Three of them actually found a significant benefit of practicing BSE (Harvey et al., nested in the Canadian National Breast Screening Study (CNBSS) randomized controlled trial; Newcomb et al.; and Gastrin et al.), one is inconclusive (Muscat et al. 1991), and the last one is a cohort study from the 1980s (Holmberg et al.). Yet the authors brush aside the positive results and go on to the final conclusion that there is no evidence supporting a possible benefit from practicing BSE. Responding to this manner of "making the case", the authors of the Canadian nested study objected that Baxter et al.:
∙ only used data from one part of the Russian study, before it was evaluated for proper randomization
∙ omitted that the BSE study nested in the CNBSS found that BSE is effective if properly practiced
∙ dismissed significant positive results of a Finnish study, citing "selection bias" due to the cohort's education level being higher than in the general population

Another critic (Ellen Warner, Associate Professor of Medicine […]) […] their results are inherently unreliable. Yet another review of this Canadian Task Force report, as well as of the USPSTF report (both sending the same message of "insufficient evidence of BSE benefit"), points out that undue significance was given to the Chinese study, which at the time had only 5 years of follow-up, and as few as […]. The review notes that over such a limited period even standard X-ray mammography screening would likely show a negative effect (the "mortality paradox"), and that, given the long interval from BC detection to death, BSE started at the beginning of the study would likely make little difference in this respect even if it is effective in the longer term (Is it time to stop teaching breast self-examination?, Nekhlyudov and Fletcher 2001). It also points to the weaknesses of the Russian study: lack of individual BSE training, design problems, and poor compliance with BSE. In addition, it questions whether the relatively high rate of false positives in the BSE group vs. the non-screened group in these studies is relevant to the North American environment, where BSE is often practiced as part of the "screening triad" with CBE and X-ray mammography. The authors cite data from their own clinical setting of this type, where "among 2400 women followed for 10 years, 196 patient-identified breast masses (either by BSE or accidentally), 402 clinical breast examinations and 631 mammograms led to additional evaluation". In other words, BSE very likely had a lower false-positive rate than CBE and standard mammography, and almost certainly not a higher one. On top of that, the review also implies that the Russian and Chinese studies did not assess the effectiveness of the treatment given to breast cancer patients. An ineffective, or significantly less effective, overall treatment than in the U.S. would negate any advantage of earlier cancer detection. Similarly, the reviewers found that the U.K. trial, used as an evidence source in the Canadian Task Force report, is inconclusive due to the "differences in BSE teaching and breast cancer treatment in the 2 study districts".

Wow! Why were these major U.S. and Canadian health agencies, supposedly independent and objective, in such a rush to present as definitive only one side of rather inconclusive evidence on BSE effectiveness (the side promoting the agenda of BSE ineffectiveness), while entirely omitting everything that doesn't fit that conclusion? The only logical explanation is that, again, we see the result of the mighty backstage influence of those whose profits and/or careers are tied to standard X-ray mammography screening, who want to sideline a potential competitor whose strengths relative to standard mammography grew as new research since 2000 dramatically eroded mammography's benefit-to-risk ratio. In fact, we can say that the evidence on the effect of physical breast exam, either clinical breast exam (CBE) or breast self-exam (BSE), that we can rely on clearly indicates that they do have a beneficial effect in both […]

For comparison, the following table summarizes the approximate ranges of the three main efficacy indicators (sensitivity, specificity and positive predictive value) for standard X-ray screening mammography, clinical breast examination and breast self-examination. It shows the USPSTF 2009 data, with figures based on all available research next to them under "Actual"; the "Approximate" figures for BSE are an estimate based on CBE data, due to the lack of data for the former. These are not full ranges, but approximate ranges that should cover the great majority of women (note that these figures do not account for overdiagnosis; the effect is illustrated on the USPSTF data table).

References are given below the top (indicator) portion of the table, which holds the values for the three main indicators of test performance. They are followed by an overview of the main negatives of these three BC screening modalities. False negative and false positive rates are determined by the sensitivity and specificity figures, respectively, and the biopsy rate is based on the most reliable source, the Canadian study (CNBSS 2).
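How the table's indicators relate to one another can be sketched with a simple 2×2 count of screening outcomes. The counts below (10,000 hypothetical women, 50 of them with cancer) are made up purely for illustration; the point is only how sensitivity and specificity determine the false negative and false positive rates, and how PPV follows from the same counts.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ScreeningOutcomes:
    """Counts from one round of screening (hypothetical example data)."""

    true_pos: int   # cancers with a positive test
    false_neg: int  # cancers missed by the test
    false_pos: int  # positive tests in women without cancer
    true_neg: int   # negative tests in women without cancer

    @property
    def sensitivity(self) -> float:
        return self.true_pos / (self.true_pos + self.false_neg)

    @property
    def specificity(self) -> float:
        return self.true_neg / (self.true_neg + self.false_pos)

    @property
    def false_negative_rate(self) -> float:
        # Share of existing cancers the test misses.
        return 1 - self.sensitivity

    @property
    def false_positive_rate(self) -> float:
        # Share of cancer-free women who get a positive result.
        return 1 - self.specificity

    @property
    def ppv(self) -> float:
        # Probability that a positive result is an actual cancer.
        return self.true_pos / (self.true_pos + self.false_pos)


if __name__ == "__main__":
    # 10,000 hypothetical women, 50 of whom have cancer.
    outcome = ScreeningOutcomes(true_pos=40, false_neg=10, false_pos=950, true_neg=9_000)
    print(f"sensitivity {outcome.sensitivity:.1%}, specificity {outcome.specificity:.1%}")
    print(f"FN rate {outcome.false_negative_rate:.1%}, FP rate {outcome.false_positive_rate:.1%}")
    print(f"PPV {outcome.ppv:.1%}")
```

In this made-up example, 80% sensitivity and about 90% specificity still leave only about 4% of positive results as actual cancers, because cancer-free women vastly outnumber women with cancer.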

*Dual USPSTF mammography ranges, as implied by its report.

Sure, these numbers are not like anything you have probably seen presented as representative of the efficacy of these three tests. But this is what the relevant evidence suggests: X-ray mammography screening has no advantage over clinical breast examination (CBE) in overall test efficiency, but it does fall behind CBE for women with dense breasts and/or a high risk of getting breast cancer (BC). It also comes with significantly more negatives: overdiagnosis risk, radiation risk, a higher biopsy rate, and the risks of breast tissue compression and pain. Breast self-exam (BSE) is not a screening test that anyone promotes as the primary one, but for more than a few women, even in the U.S., who can't afford or don't have access to mammography and/or clinical breast exam, it is the only option. Its figures are approximated quite arbitrarily, based on the CBE figures and the assumption that a properly performed BSE by the average woman will be nearly, but not quite, as efficient as a (properly performed) CBE. Let's look at these numbers more closely. Correct test sensitivity figures are important because this indicator is the one best understood by women: it tells the odds of having an existing BC detected by screening. The full sensitivity range for X-ray screening mammography is from near zero to near 100%, but if we limit it to subgroups of the screened population of at least some significance in terms of relative size, it can be approximated by a 30-95% range, the low end being high-risk women with dense breasts and the high end low-risk women with fatty breasts. As for the mammography efficacy indicators, it is evident that the USPSTF ranges are generally narrower, particularly toward the lower end, creating the impression of better overall efficiency than is actually the case.
Specifically, as a number of studies indicate, the lower end of mammography sensitivity is significantly below the USPSTF's 72% figure for a large population of women with dense breasts and/or at high risk of BC. Even the study that appears to be "damage control", in that it brings the bottom test sensitivity back safely above 50% (Carney et al. 2003), puts it at 62% for extremely dense breasts (56-69% range), ten percentage points below the USPSTF's 72% bottom sensitivity (the average for the 40-49y age group). Since about a third of all women in the 30-90y range have dense breasts (Titus-Ernstoff et al. 2006), this is a significant factor limiting mammographic sensitivity. It has, however, been very much played down and/or concealed. The other, 72-86%, sensitivity range for mammography is not explicitly given in the USPSTF report, but it is implied by its mammography performance table based on BCSC data. It disagrees not only with a number of studies, but also with the performance plot from Yankaskas et al. based on (guess what) BCSC data. Evidently, this data source, which should be the most relevant one for American women, is not necessarily reliable, can promote bias if used selectively, and/or can be used selectively to promote a desired bias. The first range for mammography sensitivity, 77-95%, explicitly given by the USPSTF as the overall range of mammographic sensitivity, is, according to the reference given, based on first-screen sensitivity figures from five large randomized controlled BC trials (Malmo, Two County, Stockholm, Edinburgh and CNBSS). This doesn't pass basic scrutiny. It is well known that first-screen sensitivity is always significantly higher than that for any extended screening period. It is also well known that trial populations are not representative of the population at large. And it is well known that the European and North American contexts differ significantly in this respect.
Last but not least, there is a good bit of evidence that most of the European studies (Two County, Edinburgh, Stockholm) are either unreliable or flawed. Why would the USPSTF present such figures to American women as representative of mammographic sensitivity? Guess: because they fit into the picture that the mammography industry […]

CBE sensitivity figures under "Actual" are based entirely on the results from the Canadian study, for the 40-49y and 50-59y age groups (CNBSS 1 and CNBSS 2, respectively). It is simply the only study in which care was taken to have CBE performed properly; even in CBE trials, the examination technique is typically neither described nor monitored against defined standards, and its practice is routinely substandard (McDonald et al. 2004). CNBSS sensitivity and specificity figures are as reported by the authors (Physical examination. Its role as a single screening modality in the Canadian National Breast Screening Study, Baines et al. 1989). The USPSTF figures are from its 2002 report (it does not give any CBE figures in its 2009 report), which does include the Canadian study but does not use a proper, direct reference to it. Instead, it references another paper by Baines, with partial data from the original paper and sensitivity numbers that better suit the purpose of creating the impression that CBE sensitivity is generally below 70%. As mentioned, BSE sensitivity figures are a quite arbitrary estimate based on the CBE figures and the assumption that BSE sensitivity will be somewhat lower, at best approaching that of CBE. There is quite a bit of evidence suggesting that even short CBE courses for laypeople enable them to achieve an overall sensitivity of 60-70% (Trapp et al. 1999, McDermott et al. 1996, Campbell et al. 1994, Warner et al. 1993, and others). As with sensitivity, the X-ray mammography specificity figures given by the USPSTF (and, we could say, by almost any "official" source) have the low portion of their lower range cut off, creating the impression that the risk of a false positive result is significantly lower than it can be. Likewise, the high end of the USPSTF range for mammography's positive predictive value is outside the typical range, while its low end does not extend to the actual low level.
Again, this creates the impression that the odds of a positive mammogram being correct are higher than they really are. Of course, it is possible to have a mammography PPV in the 20%+, even 30%+, zone, but that requires test specificity at the 98-99% level (i.e. a false positive rate of 1-2%, with sensitivity well below 50%), which is very unlikely and, frankly, not happening on any significant scale.

Conclusions

In all, what the above table suggests is that CBE is not inferior to X-ray mammography either in its ability to detect actual BC or in the price paid for it in false positive results. Of course, mammography does have a significantly higher BC detection rate, but its real value is uncertain in light of mammography's well-documented "habit" of "detecting" BC where there is none, i.e. breast abnormalities that look like BC and are diagnosed as such, but would never have developed into symptomatic disease. With the overdiagnosis rate for X-ray mammography possibly exceeding 50%, the actual cancer detection rate could be over 1/3 lower (this would also somewhat lower sensitivity, while having no appreciable effect on specificity, false positive rate and PPV). Standard X-ray mammography is not superior to CBE in efficiency, but it undoubtedly has more significant negatives, i.e. harms. It exposes the average woman to a much higher risk of being diagnosed with pseudo-BC, but also to a significantly higher overall risk from a false negative test, particularly for women with dense breasts and those at high risk of BC, as well as to about double the risk of a false positive (the 2-30% range approximately corresponds to the sensitivity range on the ROC plot based on BCSC data from Yankaskas et al., although it would be significantly worse following Pisano et al. on the same graph). Due to the generally smaller size of the detected abnormality, mammography also has a significantly higher biopsy rate.
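Two pieces of arithmetic in this passage, how strongly PPV depends on specificity and how 50% overdiagnosis shrinks the true detection rate, can be illustrated in a few lines. This is a minimal sketch under stated assumptions: the 0.5% cancer prevalence and 80% sensitivity are values assumed only for illustration, and overdiagnosis is assumed to be expressed as extra (overdiagnosed) cases per clinically relevant cancer; other definitions of the overdiagnosis rate give different numbers.

```python
def ppv(sensitivity: float, specificity: float, prevalence: float) -> float:
    """Positive predictive value from Bayes' rule."""
    true_pos = sensitivity * prevalence
    false_pos = (1 - specificity) * (1 - prevalence)
    return true_pos / (true_pos + false_pos)


def detection_rate_without_overdiagnosis(detected: float, overdiagnosis: float) -> float:
    """Remove overdiagnosed cases from a detection rate.

    Assumes `overdiagnosis` is overdiagnosed cases per clinically relevant
    cancer, so 0.5 means detected = 1.5 x real cancers.
    """
    return detected / (1 + overdiagnosis)


if __name__ == "__main__":
    SENSITIVITY = 0.80  # assumed, for illustration only
    PREVALENCE = 0.005  # assumed: 5 cancers per 1,000 screened women
    for spec in (0.90, 0.95, 0.98, 0.99):
        print(f"specificity {spec:.0%}: PPV {ppv(SENSITIVITY, spec, PREVALENCE):.1%}")

    # Hypothetical: 6 cancers detected per 1,000 screens, 50% overdiagnosis.
    real = detection_rate_without_overdiagnosis(6.0, 0.5)
    print(f"real cancers per 1,000: {real:.1f} ({1 - real / 6.0:.0%} lower)")
```

With these assumed inputs, PPV rises from under 4% at 90% specificity to under 17% at 98% and close to 29% at 99%, so, at least under these assumptions, PPV values around 20-30% do require specificity near the 98-99% level. Likewise, 50% overdiagnosis in this sense removes one third of the detected "cancers".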
The data in the table are, again, from the Canadian CNBSS 2 trial (women aged 50-59), where mammography was comparable to or better than that in other randomized controlled trials, and CBE was performed to a defined high standard. The risk of a benign biopsy in this trial was about twice as high for mammography as for CBE. It is probably even worse in practice, since the Canadian study systematically used second readers and added an examination by a study surgeon before deciding whether to call a test positive. This must have significantly reduced the incidence of false positives, and hence of biopsies, but it is not U.S. practice. A study based on U.S. data (BCSC) also found a more than double biopsy rate for mammography vs. CBE, except in the 40-49y age group, where it was 30% higher (Elmore et al. 1998). In all, what our best evidence suggests is that, when performed to a defined high standard, CBE is a cancer detection test comparable in screening efficiency to X-ray mammography.

Most of mammography's higher cancer detection rate is likely to be overdiagnosis, i.e. detection of abnormal growths that are diagnosed as cancers but would never have become symptomatic. Its higher rate of detection of small (actual) cancers is partly offset by the larger cancers it misses but CBE detects. In effect, as the Canadian trials imply, BC mortality is not significantly affected by adding X-ray mammography to properly performed CBE. The imperative for successful treatment is not to have BC detected at the earliest possible stage, where mammography does score better than CBE, but to have it detected early enough. On the other hand, CBE exposes women to significantly lower risks of overdiagnosis and overtreatment, including benign biopsy, lumpectomy, mastectomy and radiation. It also does not inflict pain, nor carry the possible risks related to breast compression, as mammography does. If properly performed, breast self-examination (BSE) can be nearly as efficient as CBE. It only makes sense to assume that all compared tests are properly performed; otherwise, the comparison is meaningless. Mammography went through a long period of standardization and technical improvement, while physical breast exam (in part probably purposefully) was largely denied the advantages of such an approach. Once more: good evidence tells us that physical breast exam, combining both CBE and BSE, can be as efficient as X-ray mammography as a screening tool for breast cancer, while exposing women to significantly lower risks and inconvenience.
